🌍 The EU's Groundbreaking AI Act: A Leap into the Future of AI Regulation 🚀

📜 A Brief Overview

The spotlight in this week's AI news shines on the EU AI Act. The European Union has secured a provisional agreement on the eagerly awaited Artificial Intelligence Act. Termed a "historic" step by EU Commissioner Thierry Breton, this draft regulation is not just a set of rules; it's a catalyst for EU startups and researchers to spearhead the global AI race. The crux of this proposed regulation? To guarantee that AI systems within the EU market are not only safe but also adhere to fundamental rights and embody EU values.

🤖 Understanding the Legislative Approach

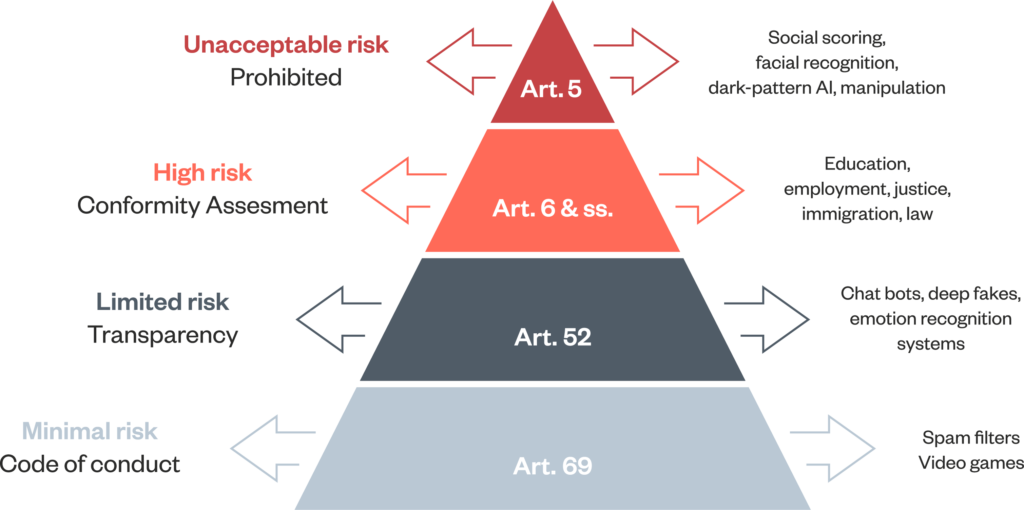

At the heart of the AI Act is a 'risk-based' strategy. AI systems are categorised based on their potential societal harm, with a simple mantra: the higher the risk, the stricter the rules. This approach makes it a horizontal legal framework, applicable across various sectors and technologies.

🎚️ Risk Categories Explained

- Minimal Risk AI: Think AI-enabled recommender systems or spam filters. These get a 'free pass' and are exempt from regulatory obligations.

- Limited Transparency Risk: Requires clear labelling of AI interactions, especially for deep fakes, chatbots and AI-generated content. Systems must also transparently indicate the use of biometric categorisation.

- High-Risk AI: These must meet stringent requirements, including human oversight, robustness, and cybersecurity. Examples include AI in critical infrastructure like water and energy, medical devices and emotion recognition systems.

- Unacceptable Risks: AI used for behavioural manipulation, social scoring, predictive policing or real-time biometric identification in public spaces will face outright bans.
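For readers who think in code, the "higher the risk, the stricter the rules" idea can be sketched as a simple lookup. This is only an illustration of the four tiers described above, not anything taken from the Act itself: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_TIERS` and `describe` are made up for this sketch, and the example use cases are just the ones mentioned in the list.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers described in the article (names are illustrative)."""
    MINIMAL = "minimal"
    LIMITED = "limited transparency"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations per tier, paraphrased from the list above.
# None means the practice is banned outright rather than regulated.
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["clear labelling of AI interactions and AI-generated content"],
    RiskTier.HIGH: ["human oversight", "robustness", "cybersecurity"],
    RiskTier.UNACCEPTABLE: None,
}

# Hypothetical mapping of the article's examples to tiers.
# Real classification depends on the legal text, not on a keyword lookup.
EXAMPLE_TIERS = {
    "spam filter": RiskTier.MINIMAL,
    "recommender system": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "deep fake": RiskTier.LIMITED,
    "medical device": RiskTier.HIGH,
    "critical infrastructure": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
    "predictive policing": RiskTier.UNACCEPTABLE,
}


def describe(use_case: str) -> str:
    """Return a one-line summary of the tier and obligations for a use case."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        return f"{use_case}: not covered by this sketch"
    duties = OBLIGATIONS[tier]
    if duties is None:
        return f"{use_case}: banned ({tier.value} risk)"
    if not duties:
        return f"{use_case}: no obligations ({tier.value} risk)"
    return f"{use_case}: {tier.value} risk; requires " + ", ".join(duties)


if __name__ == "__main__":
    for case in ("spam filter", "chatbot", "medical device", "social scoring"):
        print(describe(case))
```

The point of the sketch is the shape of the framework: one horizontal set of tiers, with obligations attached to the tier rather than to a specific sector or technology.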

🏢 Institutions and Oversight

The establishment of a European AI Office within the European Commission is intended to ensure EU-wide coordination. An AI Board, with representatives from member states, will act as a coordination and advisory platform, alongside an advisory forum involving stakeholders from various sectors.

🚫 What's Excluded?

The AI Act will not regulate AI systems used solely for military/defence purposes, research and innovation, or by individuals for non-professional reasons.

📛 AI Applications Facing Bans

The Act prohibits AI applications like behavioural manipulation, untargeted facial image scraping, emotion recognition in workplaces and educational settings, social scoring, and predictive policing.

🏗️ AI and Innovation

The Act encourages innovation, particularly for smaller companies, through regulatory sandboxes and support actions. This 'sandbox' approach allows real-world testing of AI systems under specific conditions.

Nevertheless, as reported by the Financial Times, French President Emmanuel Macron has expressed concerns that this new EU legislation on artificial intelligence might impede innovation and the competitive edge of European tech firms against their rivals in the US, UK, and China.

⏳ Timeline for Implementation

The AI Act is set to apply two years after its official entry into force, with some provisions applying earlier.

🌐 Setting a Global Standard?

Mirroring GDPR's global influence, the AI Act could become the new benchmark for AI regulation worldwide. The provisional agreement comes more than two years after the Commission's initial proposal in April 2021 and marks a significant milestone in AI governance.

🚨 Penalties for Non-Compliance

Companies failing to adhere to the Act's rules could face hefty fines, set either as a percentage of their global annual turnover or as a fixed amount, whichever is higher, so that the impact stays proportionate to the company's size.
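To make the arithmetic concrete, here is a minimal sketch of how such a fine could be worked out. It assumes the "whichever is higher" rule described in the Council's press release on the provisional agreement. The function name `fine_eur` is hypothetical, and the 2% share and EUR 10 million floor used in the example are placeholder numbers chosen for illustration, not figures from the Act.

```python
def fine_eur(global_turnover_eur: float,
             turnover_share: float,
             fixed_amount_eur: float) -> float:
    """Return the larger of a turnover-based fine and a fixed amount.

    turnover_share is a fraction (0.02 means 2%). All inputs here are
    illustrative placeholders, not the Act's actual thresholds.
    """
    if global_turnover_eur < 0 or turnover_share < 0 or fixed_amount_eur < 0:
        raise ValueError("inputs must be non-negative")
    turnover_based = global_turnover_eur * turnover_share
    return max(turnover_based, fixed_amount_eur)


if __name__ == "__main__":
    # Placeholder parameters: 2% of turnover or EUR 10 million.
    share, floor = 0.02, 10_000_000

    # Large firm: the turnover-based amount exceeds the fixed amount.
    print(fine_eur(1_000_000_000, share, floor))

    # Smaller firm: the fixed amount is the larger of the two.
    print(fine_eur(100_000_000, share, floor))
```

With these placeholder numbers, the large firm's fine is driven by its turnover, while the smaller firm's fine is set by the fixed amount. That is how a single rule can scale with the size of the company.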

💡 Transparency and Fundamental Rights

A fundamental rights impact assessment is mandatory before deploying high-risk AI systems. Transparency requirements are also tightened: users must be told when they are interacting with a chatbot, and AI-generated content must be clearly labelled as such.

👥 Insights from Industry Experts

In pursuit of a deeper understanding, I engaged with Diana Spehar, Sky's former Data Ethics Lead and a prominent advocate for responsible AI. She emphasises the importance of evaluating the AI Act's practical implementation approaches.

"Assessing the implementation is key to gauging the advocacy and credibility of EU AI regulation. The challenge lies in ensuring regulatory bodies and systems keep pace with rapid innovation. It's crucial to have empowered institutions with explicit standards that can collaborate effectively with the private sector and developers, ensuring AI safety." - Diana Spehar, Data Ethics Leader and Responsible AI Advocate

🚀 The Road Ahead

As we await the formal adoption of the AI Act, the focus shifts to its real-world application and its potential ripple effects on AI innovation and governance globally.

📚 Further Reading

For those interested in delving deeper into the EU AI Act, the following resources offer detailed insights and perspectives:

- BBC News: World Europe - A comprehensive overview of the EU AI Act, its implications, and global impact.

- European Commission Press Release: Commission Press Corner - Official statements and key highlights from the European Commission on the AI Act.

- Council of the European Union: Press Releases - Insights into the collaborative effort between the Council and the European Parliament in shaping the AI Act.

- Initial Commission Proposal (April 2021): Council Document - Access the initial proposal by the European Commission from April 2021 for a historical perspective on the AI Act's development.